Computers changed the world, but they were missing something . . .

When the microprocessor was invented in 1971, US economic productivity was at a 40-year low. Computers became popular in businesses and homes in the following years, and what came next transformed the economy and business forever. Computers gave businesses newfound abilities for number crunching, data storage, and word processing.

While computers are revolutionary in their ability to perform repetitive calculations and follow complex programmed instructions, they have never been proficient at pattern recognition. This makes image and speech recognition extraordinarily difficult for ordinary computers, which is why it took until 2017 to unlock your iPhone using your face and why telephone chatbots are so frustratingly stupid.

Pattern Recognition

Recognizing commonalities across different information sources is incredibly important. It allows humans to make an array of predictions ranging from “If I study more, I’ll do better on the exam” to “If I don’t look before crossing the road, I could get run over.” Human brains have evolved to make predictions like these; however, our limited bandwidth only allows us to consider a limited number of factors. For instance, a prediction such as “scale-trading investment patterns will lead to an increased chance of a financial meltdown” requires more information sources than the typical human brain can effectively store.

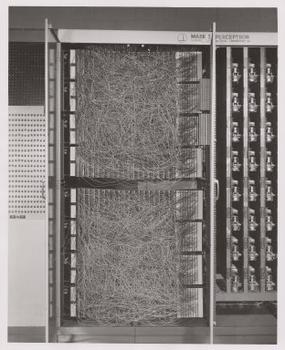

In the late 1950s, a psychologist named Frank Rosenblatt presented the Mark 1 Perceptron machine to the US Navy. The device, which weighed just under 20,000 pounds, was named after the perceptron algorithm Rosenblatt created, which allowed the machine to recognize images. Although it was expected to “walk, talk, see, write, reproduce itself and be conscious of its existence,” the machine was unfortunately only able to recognize images of simple shapes, like a triangle. The machine’s poor performance was due to the limited computing power of the time, and while the excitement around the machine quickly died, Rosenblatt’s research became the foundation for much of the artificial intelligence research that followed.

The Mark 1 Perceptron, custom packaged within an IBM 704 Computer

The Perceptron 9000

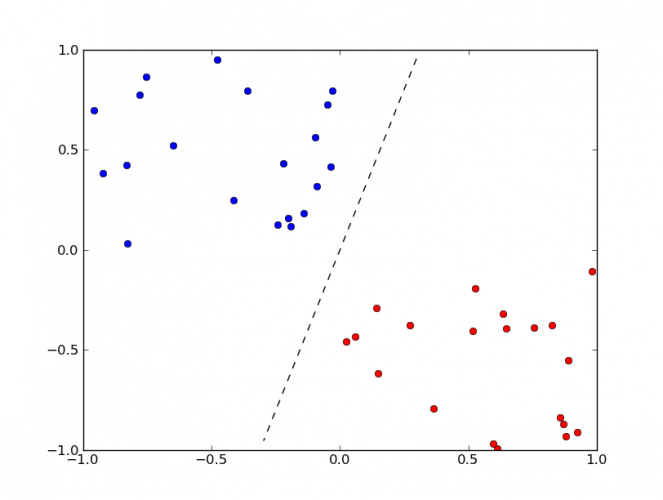

As well as having a name straight out of Star Trek, the perceptron is an incredibly powerful discovery. Known as a linear classifier, a perceptron is simply a single-layer neural network that takes inputs and splits them into two classes.

A perceptron classifies its data into two clusters

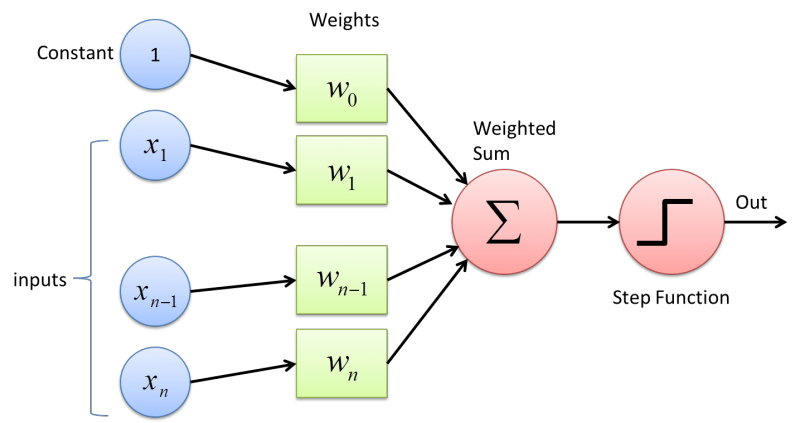

A perceptron takes inputs, applies weights and a bias, and outputs a binary value

What happens beneath the surface is slightly more complicated. The perceptron models a neuron in the human brain by taking in multiple inputs and firing a signal if their weighted sum meets a certain criterion.
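The firing rule described above can be sketched in a few lines of Python. This is a minimal illustration rather than Rosenblatt's original implementation: the function names, the threshold at zero, the learning rate, and the toy data (the logical AND of two inputs) are all assumptions chosen to keep the example small.

```python
import numpy as np

def predict(weights, bias, x):
    """Fire (return 1) when the weighted sum of the inputs plus the bias is positive."""
    return 1 if np.dot(weights, x) + bias > 0 else 0

def train_perceptron(X, y, epochs=10, lr=1.0):
    """Perceptron learning rule: weights change only when a point is misclassified."""
    weights = np.zeros(X.shape[1])
    bias = 0.0
    for _ in range(epochs):
        for xi, target in zip(X, y):
            error = target - predict(weights, bias, xi)  # -1, 0, or +1
            weights += lr * error * xi
            bias += lr * error
    return weights, bias

# Hypothetical toy data: the logical AND of two binary inputs (linearly separable).
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1])

weights, bias = train_perceptron(X, y)
predictions = [predict(weights, bias, xi) for xi in X]
print(predictions)
```

On this toy data the learned weights separate the single positive example from the other three, which is the "split into two" behavior a linear classifier provides.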

While the perceptron didn’t accomplish much on its own in the field of artificial intelligence, its successors did. Researchers built on Rosenblatt’s invention to create multilayer perceptrons (MLPs), which form the basic structure of many neural networks today.

Autoencoders and The Restricted Boltzmann Machine

The perceptron led to the invention of a network called a Restricted Boltzmann machine (RBM). Unfortunately, it’s not a Frankenstein-era device, but instead a two-layer neural network that learns a probability distribution over its inputs. RBMs were developed in 1986 by Paul Smolensky and became famous in the mid-2000s, after researcher Geoff Hinton introduced an algorithm called contrastive divergence that allows RBMs to learn quickly from their inputs.
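To make the two-layer structure and contrastive divergence more concrete, here is a minimal NumPy sketch of a single-step variant (often called CD-1). It is not Hinton's exact recipe: the helper name `cd1_update`, the layer sizes, the learning rate, and the toy binary data are assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cd1_update(W, b_vis, b_hid, v0, rng, lr=0.1):
    """Apply one CD-1 update in place and return the mean squared reconstruction error."""
    # Positive phase: hidden-unit probabilities given the data, then a binary sample.
    h0_prob = sigmoid(v0 @ W + b_hid)
    h0 = (rng.random(h0_prob.shape) < h0_prob).astype(float)
    # Negative phase: reconstruct the visible layer, then recompute hidden probabilities.
    v1_prob = sigmoid(h0 @ W.T + b_vis)
    h1_prob = sigmoid(v1_prob @ W + b_hid)
    # Move parameters toward the data statistics and away from the reconstruction's.
    n = v0.shape[0]
    W += lr * (v0.T @ h0_prob - v1_prob.T @ h1_prob) / n
    b_vis += lr * (v0 - v1_prob).mean(axis=0)
    b_hid += lr * (h0_prob - h1_prob).mean(axis=0)
    return float(np.mean((v0 - v1_prob) ** 2))

rng = np.random.default_rng(0)

# Hypothetical toy data: two binary patterns, each repeated three times.
data = np.array([[1, 1, 1, 0, 0, 0]] * 3 + [[0, 0, 0, 1, 1, 1]] * 3, dtype=float)

n_visible, n_hidden = data.shape[1], 4  # assumed sizes for the two layers
W = rng.normal(0.0, 0.1, size=(n_visible, n_hidden))
b_vis = np.zeros(n_visible)
b_hid = np.zeros(n_hidden)

errors = [cd1_update(W, b_vis, b_hid, data, rng) for _ in range(1000)]
print(errors[0], errors[-1])
```

The reconstruction error is only a rough progress signal, not the quantity contrastive divergence optimizes, but watching it fall is a convenient sanity check on a toy problem like this one.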

Today, RBMs are primarily used as part of a class of models called autoencoders, algorithms that automatically learn a representation of a data set. Because autoencoders are unsupervised learning algorithms, they can find patterns in unlabeled data such as photos, videos, voice recordings, and sensor data.
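As a rough illustration of learning a representation from unlabeled data, the sketch below trains a tiny autoencoder with plain gradient descent in NumPy. It is a generic autoencoder rather than an RBM-based one, and the variable names, the two-unit code size, the learning rate, and the toy data are all assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)

# Hypothetical unlabeled data: 6-dimensional binary vectors drawn from two patterns.
patterns = np.array([[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]], dtype=float)
X = patterns[rng.integers(0, 2, size=20)]

n_inputs, n_code = X.shape[1], 2  # compress 6 inputs into a 2-number code
W_enc = rng.normal(0.0, 0.1, size=(n_inputs, n_code))
b_enc = np.zeros(n_code)
W_dec = rng.normal(0.0, 0.1, size=(n_code, n_inputs))
b_dec = np.zeros(n_inputs)

def reconstruct(X):
    """Encode the inputs into a short code, then decode the code back to input space."""
    code = sigmoid(X @ W_enc + b_enc)
    return code, code @ W_dec + b_dec

losses = []
lr = 0.5
for _ in range(2000):
    code, X_hat = reconstruct(X)
    diff = X_hat - X
    losses.append(float(np.mean(diff ** 2)))
    # Backpropagate the mean squared reconstruction error through both layers.
    grad_out = 2.0 * diff / diff.size
    grad_code = (grad_out @ W_dec.T) * code * (1.0 - code)
    W_dec -= lr * code.T @ grad_out
    b_dec -= lr * grad_out.sum(axis=0)
    W_enc -= lr * X.T @ grad_code
    b_enc -= lr * grad_code.sum(axis=0)

print(losses[0], losses[-1])
```

No labels appear anywhere in the training loop: the only target is the input itself, which is what makes the approach unsupervised.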

This is incredibly useful for, say, interpreting the readings of an IoT sensor and determining the impact of a system failure. Similarly, autoencoders can decode their inputs and differentiate a smiling emoji from a crying emoji. Thus, they are integral building blocks of larger neural networks that make determinations based on data.

Like the perceptron, the RBM is much more powerful when stacked in multiple layers. This allows each layer to specialize in a unique component of the identification process. When stacked this way, the model is referred to as a Deep Belief Network, or DBN, which is an integral component of artificial intelligence research and shows efficacy in identification tasks similar to that of Convolutional Neural Networks.

Sources

Larochelle, Hugo, and Yoshua Bengio. "Classification using discriminative restricted Boltzmann machines." Proceedings of the 25th international conference on Machine learning. ACM, 2008.

Hinton, Geoffrey E. "A practical guide to training restricted Boltzmann machines." Neural networks: Tricks of the trade. Springer, Berlin, Heidelberg, 2012. 599-619.

Salakhutdinov, Ruslan, Andriy Mnih, and Geoffrey Hinton. "Restricted Boltzmann machines for collaborative filtering." Proceedings of the 24th international conference on Machine learning. ACM, 2007.

Rosenblatt, Frank. "The perceptron: a probabilistic model for information storage and organization in the brain." Psychological review 65.6 (1958): 386.

Hinton, Geoffrey E. "Deep belief networks." Scholarpedia 4.5 (2009): 5947.

Haykin, Simon. Neural networks: a comprehensive foundation. Prentice Hall PTR, 1994.