Machine learning has changed, and will continue to change, the world around us. Over the course of November, I completed an online Lynda course titled Deep Learning: Image Recognition by Adam Geitgey. In this course, I learned key concepts about neural networks and built on what I learned in last month’s machine learning course. By the end, I was able to get a toy neural network working and to understand, at a basic level, the different components that make it function.

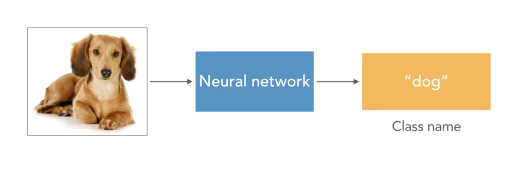

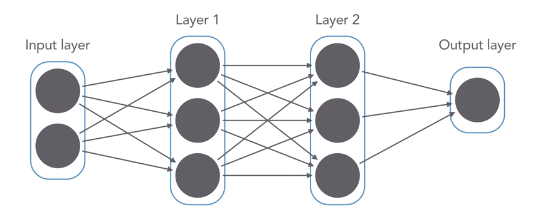

Geitgey introduces neural networks by first covering how image classification works. He defines image recognition as “the ability for computers to look at a photograph and understand what’s in the photograph.” The computer does this through a system of individual nodes called neurons. The neurons are arranged into groups, and these groups form what are called layers. Each node takes information in, performs a mathematical calculation, and then passes the result on to the next layer. Through this process the neural network is capable of handling the complex calculations involved in recognizing images.
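
To make the idea of nodes and layers concrete, here is a minimal sketch in plain Python rather than the course's Keras code. The function names, weights, and input values are all made up for illustration; a real network would learn its weights from data.

```python
def neuron(inputs, weights, bias):
    """One node: a weighted sum of its inputs plus a bias."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def layer(inputs, weight_rows, biases):
    """A layer is a group of nodes that all receive the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Three hypothetical pixel values feeding a two-node layer,
# whose results feed a single output node.
pixels = [0.2, 0.8, 0.5]
hidden = layer(pixels, [[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]], [0.0, 0.1])
output = layer(hidden, [[1.0, 2.0]], [0.0])

print(hidden)  # roughly [-0.3, 0.85]
print(output)  # roughly [1.4]
```

Each layer only ever sees the numbers produced by the layer before it, which is the "pass the result on" step described above.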

The course then walks through how to build the neural network using a framework called Keras. Geitgey explains that the next components, densely connected layers and standard activation functions, are easy to implement in Keras. A densely connected layer is one in which each node is connected to every node in the next layer. An activation function is applied to each node’s output and determines how much of that signal is passed on to the next layer. In this example, we use the ReLU activation function, which passes positive values through unchanged and replaces negative values with zero. Next come the loss function and the optimizer. The loss function measures how far the network’s predictions are from the correct answers. The optimizer is the part that trains the model: it adjusts the network’s weights to reduce the loss.
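
The roles of the activation function, loss function, and optimizer can be sketched in a few lines of plain Python. This is an illustration under simplifying assumptions, not the course's code: the model has a single weight, the loss is squared error, and the optimizer is plain gradient descent with a made-up learning rate. Keras ships ready-made versions of all three pieces, so you would not normally write them by hand.

```python
def relu(x):
    """ReLU activation: keep positive values, replace negatives with zero."""
    return max(0.0, x)


def squared_error(prediction, target):
    """Loss: how far the prediction is from the correct answer."""
    return (prediction - target) ** 2


def gradient_descent_step(weight, x, target, learning_rate=0.1):
    """Optimizer: nudge the weight in the direction that lowers the loss.

    Assumes the toy model prediction = weight * x.
    """
    prediction = weight * x
    gradient = 2 * (prediction - target) * x  # d(loss) / d(weight)
    return weight - learning_rate * gradient


activations = [relu(v) for v in [-1.5, 0.0, 2.0]]
print(activations)  # [0.0, 0.0, 2.0]

# Train the one-weight model so that an input of 1.0 maps to 2.0.
weight = 0.0
x, target = 1.0, 2.0
losses = []
for _ in range(20):
    losses.append(squared_error(weight * x, target))
    weight = gradient_descent_step(weight, x, target)

print(losses[0], losses[-1])  # the loss shrinks as training proceeds
```

The loop shows the division of labor: the loss function scores the current prediction, and the optimizer uses that score to update the weight.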

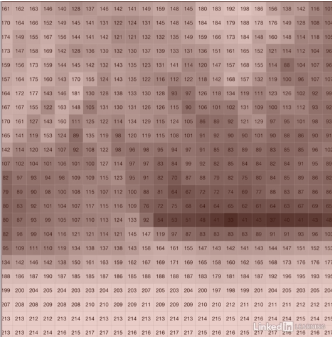

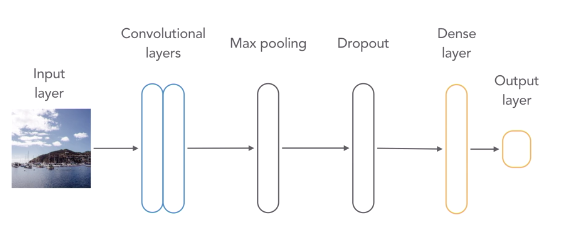

Identifying what an image actually shows requires processing a tremendous amount of data. Neural networks use the color information in the picture to calculate where the image contains an object and what that object likely is. The input is each individual pixel’s RGB values. To recognize an object, the nodes in the first layers identify simple features while nodes in deeper layers look for more specific ones. We then couple this with a convolutional layer. This layer allows our model to account for the fact that an object may occur in any part of the image. The convolutional layer breaks the image into separate small parts and then checks whether a pattern appears in any part of the photo.
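
A rough sketch of what a convolutional layer computes, again in plain Python rather than Keras. It assumes a tiny grayscale image (one number per pixel instead of three RGB values) and a hand-picked 2x2 filter that responds to a dark-to-bright vertical edge; in a real network the filter values are learned.

```python
def convolve2d(image, kernel):
    """Slide the kernel over every position in the image and record
    how strongly each patch matches it."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    result = []
    for top in range(out_h):
        row = []
        for left in range(out_w):
            total = 0
            for i in range(kernel_h):
                for j in range(kernel_w):
                    total += image[top + i][left + j] * kernel[i][j]
            row.append(total)
        result.append(row)
    return result


# Hypothetical 3x4 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Illustrative filter that responds where dark pixels sit left of bright ones.
edge_kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, edge_kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The same filter is applied at every position, so the edge is detected wherever it appears; moving the edge in the image only moves the non-zero column in the output.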

The next steps of the course involve actually building your own neural network. Much of this content is interesting and follows basic premises found in introductory machine learning courses. While building the model, Geitgey recommends including max pooling and dropout to increase the program’s efficiency and accuracy. Max pooling keeps only the largest value in each small region of the convolutional layer’s output and discards the rest. This gives the following layers less data to process and, in turn, increases efficiency. Dropout is a common regularization technique in neural networks. In this network, it means randomly discarding, during training, some of the values coming out of the max pooling layer we just added. Doing this helps prevent the model from simply memorizing the training data. This is important because we want the model to work with any images, not just those it was trained on.
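
Both steps are simple enough to sketch by hand. The code below is a plain-Python illustration, not the course's Keras code: the 2x2 pooling window, the 25% dropout rate, and the sample values are assumptions chosen for the example. The dropout function also scales the surviving values up by 1 / (1 - rate), which is how Keras's `Dropout` layer behaves during training.

```python
import random


def max_pool(feature_map, size=2):
    """Keep only the largest value in each size x size block."""
    pooled = []
    for top in range(0, len(feature_map) - size + 1, size):
        row = []
        for left in range(0, len(feature_map[0]) - size + 1, size):
            window = [
                feature_map[top + i][left + j]
                for i in range(size)
                for j in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


def dropout(values, rate, rng):
    """Training-time dropout: zero each value with probability `rate`
    and scale the survivors so the expected total stays the same."""
    keep = 1.0 - rate
    return [v / keep if rng.random() >= rate else 0.0 for v in values]


# Hypothetical 4x4 output from a convolutional layer.
feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 8],
]

pooled = max_pool(feature_map)
print(pooled)  # [[4, 2], [2, 8]]

flat = [value for row in pooled for value in row]
dropped = dropout(flat, rate=0.25, rng=random.Random(0))
print(dropped)  # some entries may be zeroed; which ones depends on the seed
```

Pooling shrinks 16 values to 4, which is where the efficiency gain comes from. Dropout is only applied while training; when the finished model makes predictions, every value is kept.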

The rest of the online course was very technical, as you walk through the actual programming behind creating a neural network. If the specifics interest you, I strongly recommend taking time to look at the course on your own. Ultimately, the course does a good job of both explaining the key concepts necessary to understand neural networks and providing a tutorial on the specific programming required to build a toy model. In the end, this course provided a solid introduction to neural networks without requiring copious readings or lectures.